Implementation Details

How does this plugin make touch input faster?

1. Modifying the touch entry point

The native side has a concept of a "View" (Android: View, iOS: UIView), and this view receives input via callbacks. We cannot trace back any further ("then who called this callback?") without going into OS code, so you can think of it as the beginning of it all. Unity receives touches from these native callbacks with its own subclass of the native view, and processes them into Input.touches.

Next, how can we override this cleanly and automatically, without editing the generated project by hand on every build, so that Native Touch works as a plugin?

iOS

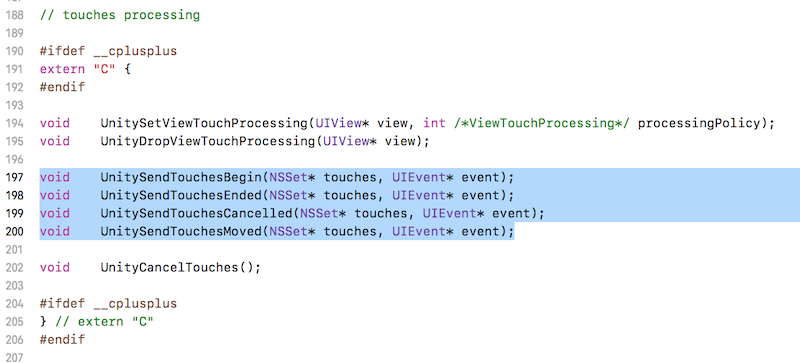

A class like UIView is a UIResponder. UIResponder has the following touch entry points, common to all iOS applications:

- (void)touchesBegan:(NSSet*)touches withEvent:(UIEvent*)event;

- (void)touchesEnded:(NSSet*)touches withEvent:(UIEvent*)event;

- (void)touchesCancelled:(NSSet*)touches withEvent:(UIEvent*)event;

- (void)touchesMoved:(NSSet*)touches withEvent:(UIEvent*)event;

If you override these methods, you can check the touch coordinates via the touches parameter. That's it!

Also note that holding a finger down without moving it afterwards will not trigger any further callback. You get one DOWN, then complete silence. There is no "holding down" callback, since a callback reports an event, not a state. This matters when using Native Touch, and when programming input in native iOS/Android apps in general. You might not expect this if you are used to the convenience of Unity's Input.touches.
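Since callbacks report edges and not levels, any "is the finger still down?" state has to be rebuilt from the events yourself. Here is a minimal sketch in Java (the language of the Android side), assuming a hypothetical Phase enum that mirrors the four iOS callbacks and an integer finger ID. Neither name comes from Native Touch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical phases mirroring touchesBegan / Moved / Ended / Cancelled.
enum Phase { DOWN, MOVE, UP, CANCEL }

// Rebuilds a "finger is held" state from edge-triggered events.
// A finger that goes DOWN and never moves produces exactly one event,
// so the held state must be remembered until UP or CANCEL arrives.
class HeldFingerTracker {
    // Last known position per finger that is currently down.
    private final Map<Integer, float[]> held = new HashMap<>();

    void onEvent(int fingerId, Phase phase, float x, float y) {
        switch (phase) {
            case DOWN:
            case MOVE:
                held.put(fingerId, new float[] { x, y });
                break;
            case UP:
            case CANCEL:
                held.remove(fingerId);
                break;
        }
    }

    boolean isHeld(int fingerId) { return held.containsKey(fingerId); }

    int heldCount() { return held.size(); }
}
```

After a single DOWN, isHeld keeps answering true through any number of silent frames, until an UP or CANCEL for that finger arrives.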

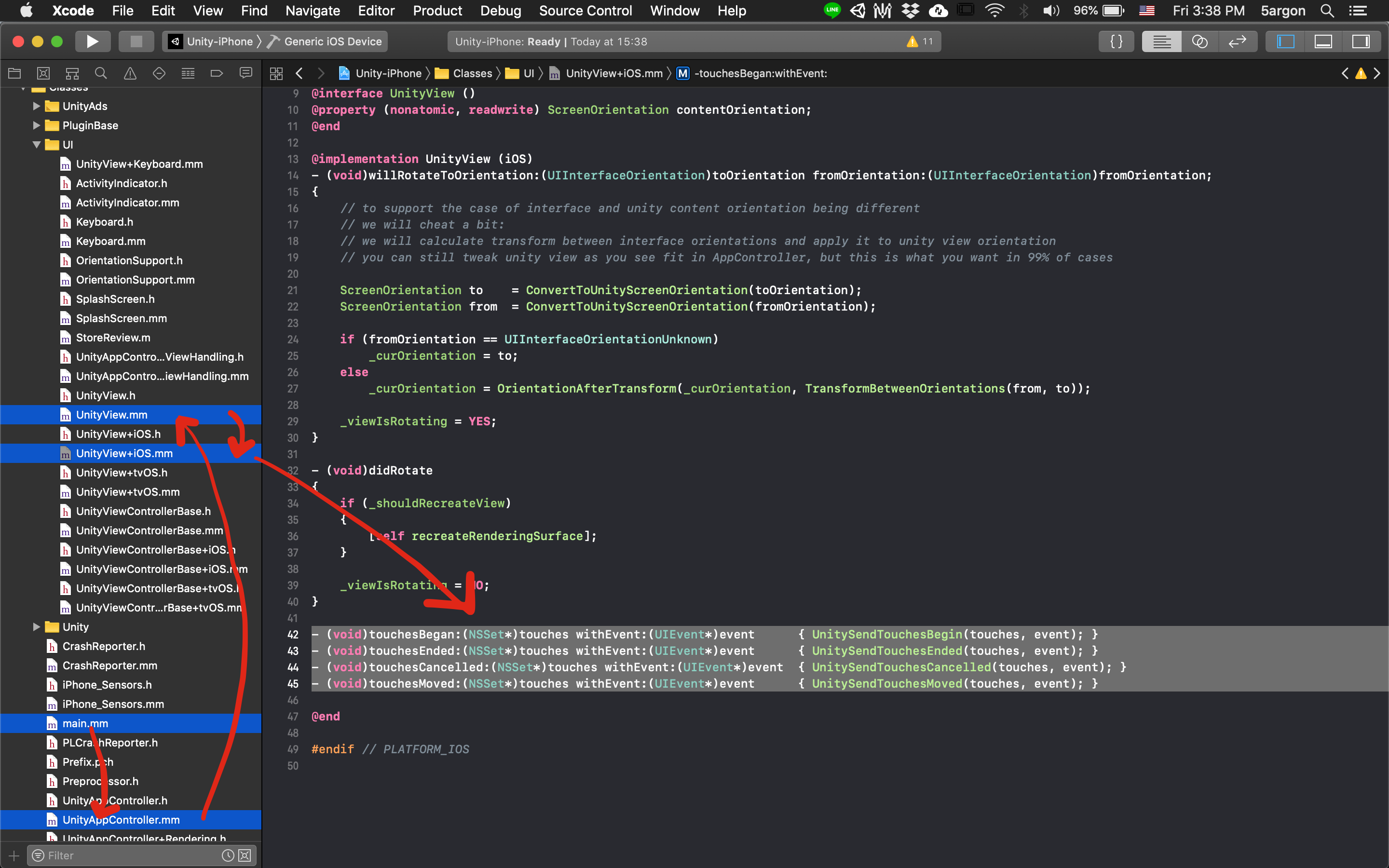

Let's follow the flow from the iOS entry point, main.mm.

main.mm loads UnityAppController (every app requires a UIApplicationDelegate, and this is the one), and this object contains UnityView as the main view that renders everything via OpenGL. UnityView is a subclass of UIView, and sure enough it is the one handling touch events. The touch event handling lives in the category file UnityView+iOS.mm, which extends UnityView.mm.

Those 4 callbacks call into Unity's own extern methods, which link to the C++ part of Unity. We assume that something beyond this point is slow, so let's create our own way to get at the touches before they reach it.

Overriding with a gesture recognizer

The fastest way to hack this is to simply change those externs to your own functions, but that could hardly be called a "plugin" if it requires you to edit UnityView+iOS.mm every time you build the game.

Instead, we use UIGestureRecognizer, which can be attached to any UIView. Normally you subclass this recognizer to intercept touch events and check whether they match a custom "gesture" you designed. In this case, I subclass it without defining any gesture, so it becomes just a generic touch handling class. The same touchesBegan and friends are all available, just like in UIView. We then attach it to UnityView. Finally, we do our own things and finish by calling Unity's extern methods, so that the touch still continues through the usual path. "Our own things" will be explained shortly, after the Android section. That completes the modified touch entry point for iOS.

Android

The earliest point a touch reaches in ANY Android app is dispatchTouchEvent() in the view hierarchy (for example, a ViewGroup's dispatchTouchEvent()). (See these slides or this talk.)

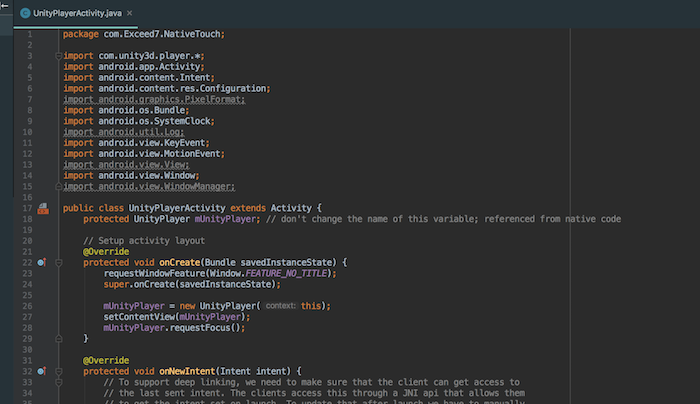

A Unity Android game starts with UnityPlayerActivity.java, like this.

This topmost activity hosts the view, which appears as the class member protected UnityPlayer mUnityPlayer; at the top. There are several touch handling events in there. But according to Extending the UnityPlayerActivity Java Code, the official recommendation is to extend this activity rather than mess with the handlers inside UnityPlayer.

This top-level UnityPlayerActivity can intercept touches before UnityPlayer with @Override public boolean dispatchTouchEvent(MotionEvent event). If we call return super.dispatchTouchEvent(event); in this override, the touch continues to the inner view. That makes this the first point of touch override we are looking for.

However, we cannot change the starting activity in Unity. The most we can do is "side load" another activity with a merged AndroidManifest.xml, as explained here (merged, not replaced). The entry point can never change from the default. Plus, modifying the starting activity might make plugin users uncomfortable.

Instead, I chose to add an OnTouchListener to the view inside that main activity from a custom class's static method. Unity provides the static variable UnityPlayer.currentActivity, so we can get the main Unity activity at any time. Attaching and detaching is clean and simple. The touch listener can now do our own things before forwarding the touch the normal Unity way. We have now successfully overridden the touch entry point for Android.
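The attach, record, then forward flow can be modeled without the Android SDK. This is a hypothetical sketch: FakeView and TouchListener stand in for Android's View and View.OnTouchListener, and TouchInterceptor is an illustrative name, not the plugin's class. It relies on the Android rule that a listener returning false from onTouch leaves the event for the view's own handler; whether Native Touch forwards exactly this way is an assumption.

```java
import java.util.ArrayList;
import java.util.List;

// Stand-in for View.OnTouchListener (hypothetical, SDK-free).
interface TouchListener {
    // Return true to consume the event, false to let the view handle it as usual.
    boolean onTouch(float x, float y, long timestampNanos);
}

// Stand-in for the Unity view: the listener runs first, and if it returns
// false the view's own handling (here, "the engine") still gets the event.
class FakeView {
    private TouchListener listener;
    final List<String> handledByEngine = new ArrayList<>();

    void setOnTouchListener(TouchListener l) { listener = l; }

    void dispatch(float x, float y, long t) {
        if (listener != null && listener.onTouch(x, y, t)) return; // consumed
        handledByEngine.add(x + "," + y);
    }
}

// Hypothetical plugin entry point: static attach/detach, record first, then forward.
final class TouchInterceptor {
    static final List<long[]> recorded = new ArrayList<>();

    static void attach(FakeView view) {
        view.setOnTouchListener((x, y, t) -> {
            recorded.add(new long[] { (long) x, (long) y, t }); // "our own things"
            return false; // do not consume: the engine still sees the touch
        });
    }

    static void detach(FakeView view) { view.setOnTouchListener(null); }
}
```

Detaching simply removes the listener, after which touches go straight to the engine again.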

2. Writing touches to the "ring buffer"

We could just send all the touches to Unity via tons of callbacks, or via one callback carrying tons of touch data. But remember that we are calling a C# callback delegate from the native side. This native-originated call can be slow because, at compile time, Unity does not know about this code path, which prevents optimizations such as IL2CPP "baking" the call. Per my research, Android Mono has much better callback performance than Android IL2CPP, thanks to JIT compilation. (iOS IL2CPP does not have this problem, as the call is statically linked; Android can only link things dynamically.)

Instead of tons of callbacks, as a base we just write those touches down in a temporary storage called the ring buffer. We can still implement a lightweight callback on top of it that carries no touch data, but just tells C#: "Hey, look at the ring buffer from here to here. Those are the new touches." This reduces both the frequency of the callback and the weight of its parameters, down to a from-to pair of integers. Alternatively, you can go with zero callbacks and just read this memory from Unity whenever you want.

The buffer is ring-shaped because I want to allocate a finite space, fill it up, and then overwrite the oldest touches in a circle. This buffer is allocated on the C# side, and C# pins it and gives the pointer to that memory area to the native side to write into. This way we can talk to C# via memory, avoiding callbacks altogether! (Don't worry, I still implemented the callback way.)
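To make the "from here to here" idea concrete, here is a small sketch of the writer half: a fixed number of slots, wrap-around that overwrites the oldest entries, and one light callback per batch. Assumptions: each touch is three ints (x, y, phase), the range is reported as a start slot plus a count (which stays unambiguous even when a batch fills the whole ring), and the Java int[] stands in for the memory that C# would pin. TouchRingWriter and RangeCallback are hypothetical names.

```java
// Writer half of a touch ring buffer (hypothetical sketch, not the plugin's code).
class TouchRingWriter {
    interface RangeCallback {
        // "Look at the ring buffer from here": start slot and number of new touches.
        void onNewTouches(int startSlot, int count);
    }

    static final int INTS_PER_TOUCH = 3;   // x, y, phase
    final int[] data;                      // stands in for the C#-pinned memory
    final int capacity;                    // number of touch slots
    private int nextSlot = 0;              // where the next touch will be written
    private final RangeCallback callback;  // may be null for the "zero callback" mode

    TouchRingWriter(int capacity, RangeCallback callback) {
        this.capacity = capacity;
        this.data = new int[capacity * INTS_PER_TOUCH];
        this.callback = callback;
    }

    // Writes one batch (e.g. all touches of one native event), wrapping around and
    // overwriting the oldest slots, then fires ONE light callback for the batch.
    // Assumes a batch never holds more touches than the ring has slots.
    void writeBatch(int[][] touches) {
        int start = nextSlot;
        for (int[] t : touches) {
            System.arraycopy(t, 0, data, nextSlot * INTS_PER_TOUCH, INTS_PER_TOUCH);
            nextSlot = (nextSlot + 1) % capacity;
        }
        if (callback != null && touches.length > 0) {
            callback.onNewTouches(start, touches.length);
        }
    }

    int[] readSlot(int slot) {
        int[] out = new int[INTS_PER_TOUCH];
        System.arraycopy(data, slot * INTS_PER_TOUCH, out, 0, INTS_PER_TOUCH);
        return out;
    }
}
```

With 4 slots, a batch of 3 followed by a batch of 2 reports (0, 3) and then (3, 2), and the fifth touch lands in slot 0 over the oldest one.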

A sufficient mutex lock has been implemented, so that while C# is reading the memory, the native side is guaranteed not to be writing at the same time. This race condition is possible on Android, where the native side could be writing to the ring buffer on another thread while the Unity main thread is reading.

Bad ways of talking to C# from native side

Let's also look at the alternative we are avoiding. There is only one official way for native code to talk back to Unity: UnitySendMessage(gameObjectName, methodName, stringMessage). (It is also available on Android, via the Unity view.) You might feel déjà vu, since the managed-side counterpart is gameObject.SendMessage(methodName, stringMessage). That 2-string-argument message has a bad reputation for being very slow and error-prone, because your code cannot check a string. Plus there are many ways to NOT use this method and still do the same thing.

But this 3-string-argument version, UnitySendMessage(gameObjectName, methodName, stringMessage), is essential, because the native side knows nothing about your Unity scene. With it you can reach any GameObject in the scene by a string search, without knowing whether it is there or not. And that is not all: it can reach any instance method with a void return and a single string parameter, again by string search.

Seeing that it does a string search twice, I could not bring myself to use this method. I would also have to encode all the touch coordinates into that one string message argument, which takes time both to build and to parse back on the C# side. This is not the way to do callbacks efficiently.
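For a sense of what that encoding involves, here is a sketch of packing touches into one string and parsing them back. The "x,y,phase;x,y,phase" format and the StringTouchCodec name are made up for illustration; the point is the formatting, splitting, and number parsing on every message, none of which a raw memory read needs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical codec showing the work a single-string message forces on both sides.
final class StringTouchCodec {
    // Native side: format every touch as text.
    static String encode(float[][] touches) {
        StringBuilder sb = new StringBuilder();
        for (float[] t : touches) {
            if (sb.length() > 0) sb.append(';');
            sb.append(String.format(Locale.ROOT, "%.1f,%.1f,%d", t[0], t[1], (int) t[2]));
        }
        return sb.toString();
    }

    // Receiving side: split and parse every number back.
    static List<float[]> decode(String message) {
        List<float[]> out = new ArrayList<>();
        if (message.isEmpty()) return out;
        for (String part : message.split(";")) {
            String[] f = part.split(",");
            out.add(new float[] {
                Float.parseFloat(f[0]), Float.parseFloat(f[1]), Integer.parseInt(f[2])
            });
        }
        return out;
    }
}
```

The one-decimal format also silently decides how much precision survives the trip.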

So instead, on start, the C# side sends a static delegate pointer for the native side to call. This is already better than UnitySendMessage, but we optimized further by writing the data that would have gone into the delegate call to the ring buffer instead, further reducing the weight and frequency of the delegate call. Read the research confirming that using a static pointer is 1.5 times faster than UnitySendMessage.

In C#, this then invokes an event that contains your callbacks. This article confirmed that a C# event is faster than UnityEvent, so we are going with that.

3.1. Using the touches via : callbacks

When you start Native Touch, you can set it up with some C# static methods as callback targets. After Native Touch finishes writing all the touches to the ring buffer, and before sending those touches on the normal Unity way, it calls a single built-in Native Touch callback, which in turn calls all of your callbacks.

As explained, the callback is actually pretty light: just 2 integers telling C# where on the ring buffer to read. But you don't even have to use those integers; Native Touch reads them for you and presents each NativeTouchData to your callback one by one. (So your callback's delegate signature takes a single NativeTouchData, not 2 ints.) You can then easily do anything you want with each one.
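The fan-out from two integers to one call per touch could look like the following sketch. TouchData and TouchDispatcher are hypothetical stand-ins for NativeTouchData and the plugin's built-in callback, and Java's Consumer plays the role of the C# event.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical stand-in for NativeTouchData (the real struct has more fields).
final class TouchData {
    final int x, y;
    final long timestamp;

    TouchData(int x, int y, long timestamp) {
        this.x = x;
        this.y = y;
        this.timestamp = timestamp;
    }
}

// One built-in callback receives (start, count) and hands each touch
// to every registered user callback, one by one.
final class TouchDispatcher {
    private final TouchData[] ring;
    private final List<Consumer<TouchData>> userCallbacks = new ArrayList<>();

    TouchDispatcher(TouchData[] ring) { this.ring = ring; }

    void register(Consumer<TouchData> cb) { userCallbacks.add(cb); }

    // The only thing crossing the native boundary: two integers.
    void onNewTouches(int startSlot, int count) {
        for (int i = 0; i < count; i++) {
            TouchData t = ring[(startSlot + i) % ring.length]; // wraps around the ring
            for (Consumer<TouchData> cb : userCallbacks) cb.accept(t);
        }
    }
}
```

A range that starts in the last slot simply continues at slot 0, so user callbacks never see the wrap-around.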

Keep in mind, and this is very important, that the C# callback is initiated by whatever native touch handling routine exists in Java/Objective-C, and it runs on whatever thread that routine was designed to run on. On iOS it is Unity's main thread, so no headache. On Android, however, it is a different thread! You have to be careful about what you do in that C# callback. You might be surprised that even Time.frameCount does not work on Android, because it is not cross-thread compatible. Also say goodbye to using AudioSource in that callback to speed up audio playback, because most Unity components are not cross-thread compatible either. (...but you could use my Native Audio, which is static, purely native, and cross-thread compatible.)

There are many things in Unity that sound like they could work cross-thread but cause a hard crash when you try. AudioSource in particular collects all Play() commands and waits to mix and play them all at the end of the frame. Or try setting a uGUI .text in the callback on Android, and you now have a 50% chance of hard crashing the game if your set happens to land while it is rendering. (So don't do it.)

(Fun fact: on Android, what you call the "Unity main thread" is actually a sub-thread all along! Right at the start of a Unity application, on the Android main thread, there is code that spins up a thread using the Runnable API, and the whole of Unity runs on that new thread. Input handling on the view actually happens on "Android's main thread", but viewed from the Unity side the touch looks like it is on a sub-thread.)

So, uh, the Android callback can't do anything?

Most Unity things are not usable cross-thread. It may seem like you can't do anything in the Android callback other than store the touch and wait for the main thread to use it. That sucks, right? But I have to leave the most absolutely native way in for users who manage to pull off something novel, because the point of Native Touch, besides the potential speed gain, is to return input control to you where it previously "sank" into the game engine's black box.

Check out Jackson Dunstan's excellent article, where he collected the things that are job-safe.

For example, you could try a simple variable value change. (That variable somehow has to be made visible from the static callback's scope.) If the change happens just before the value is used on the Unity main thread, you may gain an advantage. But if it is a reference type, use the lock() keyword in C# to prevent the main thread from accessing it at the same time, or you may encounter an unexpected crash. Just keep this in mind and you will be fine: "I am working in concurrent code. This may be in between Unity's update, rendering, or whatever."
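One safe shape for "store it now, use it on the main thread later" is a mailbox guarded by a lock and drained once per frame. This Java sketch uses synchronized where C# would use lock(); TouchMailbox, post, and drain are hypothetical names, and a single int stands in for the touch data.

```java
import java.util.ArrayList;
import java.util.List;

// The touch thread only appends under the lock; the main thread swaps the
// list out under the same lock and then processes it outside the lock.
final class TouchMailbox {
    private final Object lock = new Object();
    private List<Integer> pending = new ArrayList<>();

    // Called from the (non-main) touch callback thread.
    void post(int touchX) {
        synchronized (lock) {
            pending.add(touchX);
        }
    }

    // Called once per frame from the main thread, e.g. from an Update().
    List<Integer> drain() {
        List<Integer> taken;
        synchronized (lock) {
            taken = pending;
            pending = new ArrayList<>();
        }
        return taken; // no other thread holds a reference to this list anymore
    }
}
```

Swapping the whole list under the lock keeps the critical section tiny, so the touch thread is never blocked while the main thread processes touches.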

The callback can be the fastest, but because of these complexities I have prepared another way to use the touches...

3.2. Using the touches via : ring buffer iteration

Because we write to a ring buffer on the native side as a base, while that memory area is actually owned by C#, we can access the touches comfortably in our Update() code by inspecting the ring buffer directly! We can now disable the callback altogether and remove the cross-thread complexity.

This is recommended on platforms like Android IL2CPP, where the callback is somehow very slow. Yes, a callback can be the most instant way of handling input, but only when it is fast. On Android IL2CPP it can take 5-20 ms per callback, which can cost you more than a frame, and you might even lose to Unity's Input.touches!

You can disable the callback completely with the noCallback option when starting Native Touch, and check the ring buffer manually instead. (Without this option, Native Touch will not let you start before you register at least 1 callback.) I call this the ring buffer iteration method. You can also choose to receive callbacks AND iterate the ring buffer later, but I am not sure whether that is a wise decision.

The static property NativeTouch.touches is your gateway to that ring buffer (in the same fashion as Unity's Input.touches). But it would suck to have to pinpoint by yourself where the newest touches to read are, so I have prepared a wrapper called NativeTouchRingBuffer. It contains the logic to remember where on the ring buffer you have already read and where the latest touch is, so you don't iterate past it and end up at the oldest touch sitting right next to it. (Thanks, ring-shaped buffer...) Most importantly, it has a basic mutex lock, so you don't unknowingly read while the native side is writing and get strange data or cause a SIGSEGV. The algorithm used is Dekker's algorithm.
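Dekker's algorithm gives mutual exclusion for exactly two parties using only shared flags and a turn variable, which fits one native writer and one C# reader. The sketch below is a generic textbook version in Java, not the plugin's code. It assumes both sides are threads sharing the same memory, and it relies on Java's volatile semantics (AtomicIntegerArray and a volatile field), because with plain fields the reordering allowed by compilers and CPUs would break the algorithm.

```java
import java.util.concurrent.atomic.AtomicIntegerArray;

// Dekker's algorithm for two parties, identified as 0 and 1
// (e.g. 0 = native writer, 1 = C# reader; the roles are an assumption).
final class DekkerLock {
    private final AtomicIntegerArray wants = new AtomicIntegerArray(2); // 1 = wants to enter
    private volatile int turn = 0; // who gets priority when both want in

    void lock(int me) {
        int other = 1 - me;
        wants.set(me, 1);
        while (wants.get(other) == 1) {
            if (turn != me) {
                wants.set(me, 0);           // back off and let the other side finish
                while (turn != me) Thread.yield();
                wants.set(me, 1);
            } else {
                Thread.yield();             // our turn: wait for the other side to back off
            }
        }
    }

    void unlock(int me) {
        turn = 1 - me;      // hand priority to the other side
        wants.set(me, 0);
    }
}
```

The algorithm only works for two parties, which is enough here because exactly one side writes and one side reads.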

Instead of a for loop as with Input.touches, you use a while loop on nativeTouchRingBuffer.TryGetAndMoveNext(out NativeTouchData), which stops correctly by returning false. It even handles the case where you forgot to iterate for so many frames that the ring buffer circled more than one full round and overwrote your previous position: the next time you iterate, it jumps to start at the earliest touch still available on the ring buffer. With a method like nativeTouchRingBuffer.DiscardAllTouches() you can also choose to catch the current position pointer up without calling move next multiple times.
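The cursor bookkeeping described above can be sketched with two monotonically increasing counters: how many touches were ever written and how many were read. The slot is the counter modulo the capacity, and the oldest surviving touch is at "written minus capacity". RingReader, tryGetAndMoveNext, and discardAllTouches are hypothetical Java names echoing the C# API, and the lock from the previous paragraph is left out to keep the sketch single-threaded.

```java
// Consuming reader cursor over a ring buffer (hypothetical sketch).
final class RingReader {
    final long[] slots;           // one long per touch, e.g. a packed value or timestamp
    private long written = 0;     // total touches ever written (advanced by the writer)
    private long readCursor = 0;  // total touches consumed (advanced by this reader only)

    RingReader(int capacity) { slots = new long[capacity]; }

    // Writer side, included so the sketch is self-contained.
    void write(long touch) {
        slots[(int) (written % slots.length)] = touch;
        written++;
    }

    // Returns false when caught up. If the writer lapped us, jump forward
    // to the oldest touch that has not been overwritten yet.
    boolean tryGetAndMoveNext(long[] out) {
        long oldest = Math.max(0, written - slots.length);
        if (readCursor < oldest) readCursor = oldest;
        if (readCursor >= written) return false;
        out[0] = slots[(int) (readCursor % slots.length)];
        readCursor++;
        return true;
    }

    // Catch up to the newest position without visiting each touch.
    void discardAllTouches() { readCursor = written; }
}
```

Reading consumes: once a loop has drained the buffer, a second loop in the same frame sees nothing until new touches are written.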

This is where my NativeTouch.touches differs from Input.touches. You can read Input.touches multiple times in multiple Update() calls and still get the same thing, as long as you are in the same frame. With nativeTouchRingBuffer.TryGetAndMoveNext(out NativeTouchData) you are moving the cursor in the ring buffer and in effect "using up" the touch. You have to forward the result if you want to use it elsewhere. Also remember that NativeTouchData fundamentally represents the collected callback events, so a held finger still turns into only 1 DOWN event. (As opposed to checking Input.touches and finding the touch there on every frame, as Stationary after Began, as long as the finger is held.) NativeTouch.touches also carry over to the next frame. If you don't read them, the cursor stays put and new touches keep being added by the native side, which could eventually circle around and overwrite what you still have to read.

You can increase the ring buffer's size as needed via a start option. I found that even when reading all of them every frame with all fingers mashing the screen, I could not get close to 100 touches in 1 frame, and the default is currently 150.

So, did we completely defeat the point of Native Touch by iterating instead of using callbacks? No, for several reasons:

- Still could be faster: the data may still appear earlier than, or at the same time as, Input.touches. I had a report that on some phones Input.touches data appears as late as 3 frames after the touch. You may see data appearing in NativeTouch.touches earlier. Theoretically it cannot be later, since the code is instructed to write to the ring buffer area before even handing the touch to Unity.

- Still more data: the touches waiting in the ring buffer of NativeTouch.touches have touch timestamps. Even if they arrive at the same time as Input.touches, you still gain information that Unity discarded.

- Still customizable and source available: the touches are "native". We can modify the plugin to include more from the native side that you fear Unity is discarding or processing out to fit the `Touch` data structure. The touch timestamp is one such thing that we added back. Unity's touch processing is closed source, and we can do nothing about it. The "native" in the name guarantees that you can do whatever native Android or iOS can do with those events. Native Touch just makes them appear in Unity more easily, without any processing.

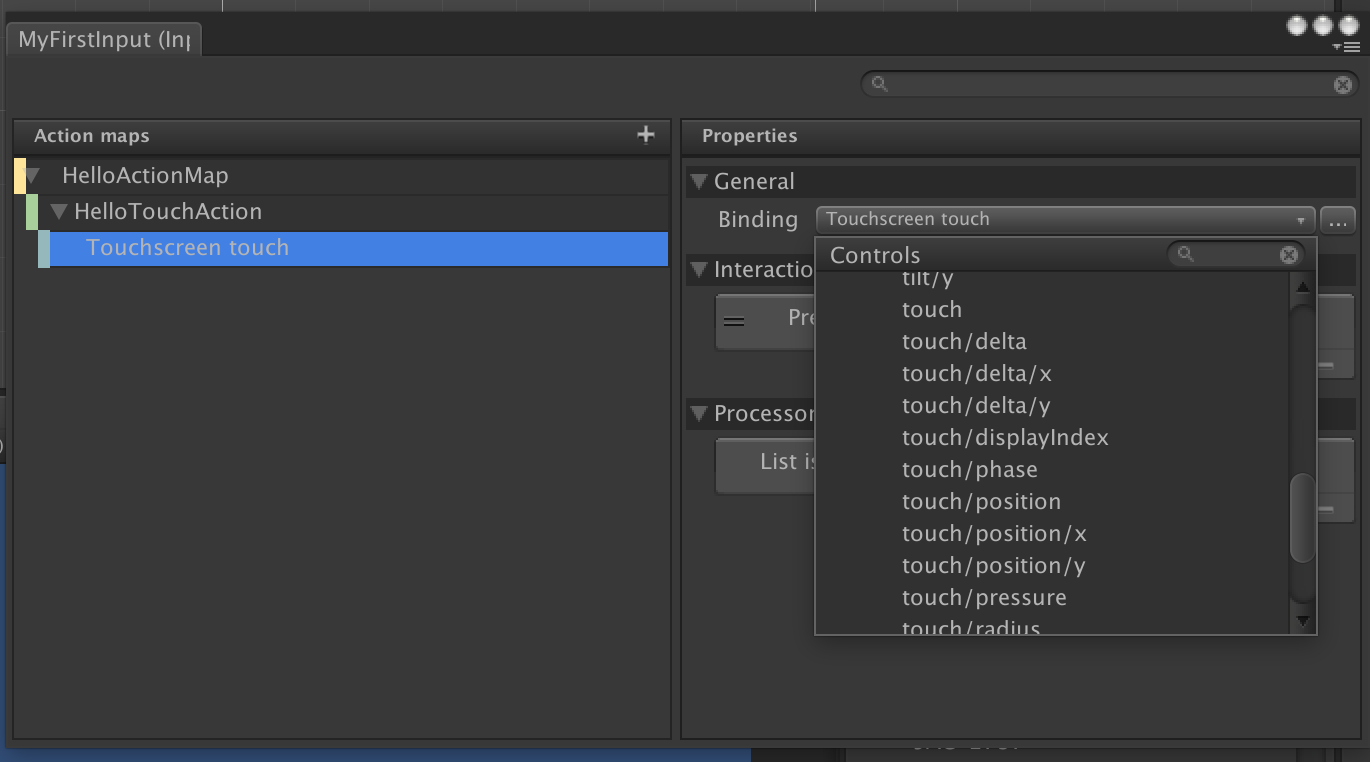

Extra : Unity's "New Input System"

Unity has been developing a "new input system" for approximately 3-4 years. It currently has no guaranteed release date, but it is in the "Feedback Build" section of the roadmap. It is now accessible from the Unity Package Manager, where the package is named "Input System". You can follow the development in this forum. The in-progress documentation is located here.

The key points (you might want to read the Callback Details page first):

- The new input system strongly encourages a callback-based API instead of polling, just like Native Touch. You can see this expressed in places like the code comments in InputAction.cs.

- The "Input State Memory" in the low-level architecture diagram is equivalent to Native Touch's "ring buffer" explained earlier.

- Touches received are frame-rate independent and come with touch timestamps, like Native Touch. This is a much needed improvement to Unity's current input system.

- The new input system is highly flexible. With a serialized configuration file you can make your game shift between just about any input scheme and type, and even between multiple local players. Native Touch is an inflexible alternative, because it is hard-fixed to work only with the touch screen and even has fixed return data.

- The new input system handles data differences between operating systems and makes them consistent, handles composite actions (like a 2D joystick), filters noisy input, tries to accommodate all kinds of devices, etc. Native Touch is inferior here, because it alters the data as little as possible for the best latency, and it supports strictly only the touch screen, and only on iOS and Android. (The Input System could support a Surface's touch screen, for example.)

- Native Touch strictly cannot be slower than the new Input System, since Native Touch is a hack that inserts the callback at the OS's first possible line of touch handling, before even giving the touch to Unity. That means before Unity has anything to process for the new Input System. Also, the Input System wasn't designed for maximum-speed input handling, but for maximum flexibility. Native Touch has mediocre flexibility but provides the shortest possible path for a touch from native to Unity.

- In the hidden UnityEngineInternal.Input namespace there is a hidden NativeInputSystem class, with static callbacks like .onUpdate that are called from the black-box proprietary code. In this callback we can get an unsafe pointer to the event buffer, but this is as far as we can "go native" in terms of the new Input System. Also, this memory buffer is undocumented, though you could try to reverse engineer it by looking at how the C# Input System package delivers touches from this memory.

In summary, Unity's new input system is definitely a big improvement while still following the "develop once, run everywhere" concept of modern game engines. Native Touch is still needed even with the new input system, since it is more specialized for the touch screen and cuts away all the processing. (It basically just fast-forwards the callback event to Unity in the form of NativeTouchData, so you can do whatever you want.)