Callback Details

This page is a bonus article explaining all the annoying callback details of each OS. I have researched how they behave quite a lot, so this might save you time and help you make use of all the events. These are things that native iOS/Android developers already have to deal with. Now that we go native from Unity, we have to care about them too. (It is not Native Touch's fault!)

iOS

iOS touch is callback based

Unity's Input.___ is not callback based. It is based on state polling. Unity actually receives the callbacks, but it turns them into a state that we can easily check within a frame, unfortunately adding some input latency in the process. Pure iOS input handling, on the other hand, is callback based, with 4 types of callbacks: began, ended, cancelled, and moved. Native Touch lets you receive those callbacks in C# as if you were developing a native Xcode app.

You used to be able to read Input.touches repeatedly on every frame to check for touches. With Native Touch you cannot poll like that anymore; you must wait for the static callback to be called. This is a radical change and takes a while to get right, but this is how most native input handling works. See the next page, How To Use, for more gotchas you have to know.

One important, not-so-obvious point if you are used to Unity:

- iOS touch DOES NOT provide a finger/pointer ID. Unity tracks touches and makes the IDs up.

- Thankfully, iOS touch DOES provide the previous point of each touch event, so you can infer which touch is the continuation of a previous touch.

- For other available/unavailable features, see the Reference page.
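Because iOS gives you the previous point but no ID, you have to make up finger IDs yourself, the way Unity does. Below is a minimal sketch, assuming each callback hands you a phase (in Unity's TouchPhase numbering) plus the current and previous positions. The class and method names are hypothetical and not part of Native Touch's API.

```python
# Hypothetical sketch (not Native Touch's API): make up finger IDs on iOS by
# chaining each touch's previous position to a tracked finger's last position.
# Phase values follow Unity's TouchPhase numbering.
BEGAN, MOVED, STATIONARY, ENDED, CANCELLED = 0, 1, 2, 3, 4


class FingerIdAssigner:
    def __init__(self):
        self.last_pos = {}  # made-up finger ID -> last known (x, y)

    def _lowest_free_id(self):
        fid = 0
        while fid in self.last_pos:
            fid += 1
        return fid

    def on_touch(self, phase, x, y, prev_x, prev_y):
        """Return the made-up finger ID for one touch, or None if it links to nothing."""
        if phase == BEGAN:
            fid = self._lowest_free_id()
            self.last_pos[fid] = (x, y)
            return fid
        # A continuation's previous position is where some finger last was.
        match = next((fid for fid, pos in self.last_pos.items()
                      if pos == (prev_x, prev_y)), None)
        if match is None:
            return None
        if phase in (ENDED, CANCELLED):
            del self.last_pos[match]  # the finger is gone; its ID becomes free
        else:
            self.last_pos[match] = (x, y)
        return match
```

This plain chaining breaks on the Moved-Ended special case covered later on this page, where the Ended repeats the Moved's data instead of linking to it.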

No stationary phase

Notice that there is no callback at all while a finger is held still on the screen! You have to infer the "stationary" phase without those 4 callbacks. Yet iOS does have a stationary phase. So when do we get this phase, if there is no stationary callback?

The iOS callback looks like this: -(void)touchesBegan:(NSSet<UITouch *> *)touches withEvent:(UIEvent *)event. The touches related to this callback are in touches, while all touches, including ones not related to the callback, are in [event allTouches]. The stationary phase can only be found in [event allTouches], and only in the case of multitouch: it is assigned to fingers that are currently held down without moving. However, because it only comes along with one of the 4 callbacks, we can never get the stationary phase with only one finger. [event allTouches] can also give us duplicate data. Imagine that in the same frame one touch moves and another touch goes up. You would get both a moved callback and an ended callback, each with exactly the same data set (the moved touch and the ended touch). Native Touch doesn't want to repeat unnecessary data, so it uses touches, and you will never get the stationary phase from Native Touch. (If you really want the allTouches version, you can uncomment a preprocessor directive in the .mm file, but there is no new information to gain there.)
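Since stationary never arrives as its own callback, you derive it from your own bookkeeping. Below is a minimal sketch, assuming you already assign finger IDs yourself (iOS gives you none) and record which IDs received a callback since the last Update. The function name is hypothetical.

```python
# Hypothetical sketch: Native Touch never reports a stationary phase on iOS,
# so treat any finger that is still held down but received no callback since
# the last Update as stationary. This also covers the single-finger case.
def infer_stationary(down_ids, reported_ids):
    """down_ids: finger IDs your own bookkeeping says are held down.
    reported_ids: finger IDs that received any callback since the last Update.
    Returns the stationary finger IDs in ascending order."""
    return sorted(set(down_ids) - set(reported_ids))
```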

Touches are queued up

The callbacks do not fire at arbitrary times; they arrive together at certain intervals. The touch screen hardware records all the actions and turns them into a batch of callbacks. Look at this 1-finger log in relation to Unity's Update.

-- Callback type : Began --

XY [386, 1015] PrevXY [386, 1015] Began

-- Callback type : Moved --

XY [414, 943] PrevXY [386, 1015] Moved

Update

-- Callback type : Moved --

XY [477, 845] PrevXY [414, 943] Moved

-- Callback type : Moved --

XY [564, 744] PrevXY [477, 845] Moved

Update

-- Callback type : Moved --

XY [685, 627] PrevXY [564, 744] Moved

-- Callback type : Moved --

XY [802, 527] PrevXY [685, 627] Moved

Update

-- Callback type : Moved --

XY [902, 448] PrevXY [802, 527] Moved

-- Callback type : Moved --

XY [974, 395] PrevXY [902, 448] Moved

Update

-- Callback type : Moved --

XY [1010, 368] PrevXY [974, 395] Moved

-- Callback type : Ended --

XY [1014, 364] PrevXY [1010, 368] Ended

As expected, each previous coordinate links to the preceding current coordinate in a chain. But notice that we can get up to 2 callbacks before each Unity Update. The last pair even delivers the final movement together with the Ended. A single callback never contains the same finger more than once. Look at this more complex 3-finger sequence:

-- Callback type : Began --

XY [768, 403] PrevXY [768, 403] Began

XY [1072, 561] PrevXY [1072, 561] Began

-- Callback type : Began --

XY [523, 580] PrevXY [523, 580] Began

-- Callback type : Moved --

XY [767, 449] PrevXY [768, 403] Moved

XY [1072, 613] PrevXY [1072, 561] Moved

Update

-- Callback type : Moved --

XY [529, 637] PrevXY [523, 580] Moved

XY [775, 519] PrevXY [767, 449] Moved

XY [1079, 683] PrevXY [1072, 613] Moved

-- Callback type : Moved --

XY [549, 710] PrevXY [529, 637] Moved

XY [794, 599] PrevXY [775, 519] Moved

XY [1082, 761] PrevXY [1079, 683] Moved

Update

-- Callback type : Moved --

XY [572, 808] PrevXY [549, 710] Moved

XY [811, 708] PrevXY [794, 599] Moved

XY [1082, 864] PrevXY [1082, 761] Moved

-- Callback type : Moved --

XY [581, 921] PrevXY [572, 808] Moved

XY [1079, 972] PrevXY [1082, 864] Moved

-- Callback type : Ended --

XY [811, 708] PrevXY [794, 599] Ended

Update

-- Callback type : Ended --

XY [585, 924] PrevXY [581, 921] Ended

XY [1076, 975] PrevXY [1079, 972] Ended

Before the first Update we have one Began callback with 2 fingers and another one with the 3rd finger, plus some movement of the 1st and 2nd fingers, which went down earlier. I observe that we get up to 2 "chances" per Update, and in each chance iOS can fire each kind of callback once. Before the first Update we had 1. Began, 2. Began + Moved. Before the last Update we got 1. Moved, 2. Moved + Ended.
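If you want to check the "up to 2 callbacks per Update" observation on your own device, a tiny log-analysis helper can count the callbacks between Update markers. This is a hypothetical sketch that assumes your own debug logging prints lines in the same format as the logs on this page.

```python
# Hypothetical log-analysis helper, assuming your debug logging prints
# "-- Callback type : ... --" headers and "Update" lines like the logs above.
def callbacks_per_update(log_lines):
    """Return how many callbacks arrived before each Update; the last entry
    counts the callbacks after the final Update in the log."""
    counts = [0]
    for line in log_lines:
        line = line.strip()
        if line.startswith("Update"):
            counts.append(0)
        elif line.startswith("-- Callback type"):
            counts[-1] += 1
    return counts
```

Running it over the 1-finger log above should give [2, 2, 2, 2, 2].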

The timing of iOS touch

"Timing" refers to when, relative to Unity's game loop, you receive the callback. Benchmarking the static callback against Unity's normal Event Trigger (routed to a public method) on an iPod Touch Gen 5, the callback always arrives 1 frame earlier.

For example, say you have 3 scripts U1, U2, U3 whose Update runs in that order (set in Script Execution Order), plus some other script routed to an Event Trigger's Pointer Down.

Without Native Touch, when you touch the screen you always get this:

[Frame 499] (U1) (U2) (U3) - [Frame 500] (Event Trigger) (U1) (U2) (U3)

With Native Touch on iOS you get this:

[Frame 499] (U1) (U2) (U3) (NATIVE TOUCH) - [Frame 500] (Event Trigger) (U1) (U2) (U3)

You have a chance to respond 1 frame earlier than Event Trigger: the callback is placed after all Updates, at the end of the previous frame, IN THE MAIN THREAD. This order was the same every time I tested. You can also see that Unity's Event Trigger comes before the Update of every MonoBehaviour in the same frame, so that is the fastest input Unity offers. Since the callback runs in the main thread, everything works: you can set uGUI, play an AudioSource, etc.

How to make use of the touch and gain 1 frame advantage

Unity's input API has this rule: when you ask it, it answers with the touch captured in the previous frame.

So, since on iOS we get the callback at the end of the previous frame, there is no way to meaningfully use this touch data later than the callback itself; you have to use it right there. All other scripts have already passed their own Update() and LateUpdate(). You must seize this chance: if you just keep the touch data and use it in the next frame, you end up tied with Unity's touch, because Unity uses the data from the previous frame too.

Moreover, at this point the chance to submit graphics data for rendering has already passed (it really is at the end). So even if you use this callback to set the position of something, the new position will not be reflected on screen until the next frame.

But it is not all useless! Apparently it is still not too late to submit audio commands. Calling audioSource.Play/PlayOneShot in the callback results in consistently faster audio playback in my benchmarks (and this is my favorite use case). If you want to prove this yourself, call PlayOneShot in the callback and again in your Update() script guarded by an if on phase Began; you will hear 2 slightly offset sounds from 1 touch. Using Native Audio instead is even better, as it can play the audio right there. There might be other things you can do here too. And remember that even if you have nothing to do here, you still have the real timestamp to use in the next frame.

Special case of Moved-Ended

-- Callback type : Moved --

XY [631, 928] PrevXY [588, 919] Moved

XY [1389, 996] PrevXY [1364, 979] Moved <---- 1

XY [1172, 690] PrevXY [1136, 676] Moved <---- 2

XY [872, 643] PrevXY [848, 636] Moved

Update

-- Callback type : Moved --

XY [919, 647] PrevXY [872, 643] Moved <---- 3

XY [666, 938] PrevXY [631, 928] Moved

-- Callback type : Ended --

XY [1389, 996] PrevXY [1364, 979] Ended <---- 1

XY [1172, 690] PrevXY [1136, 676] Ended <---- 2

-- Callback type : Moved --

XY [692, 943] PrevXY [666, 938] Moved

-- Callback type : Ended --

XY [919, 647] PrevXY [872, 643] Ended <---- 3

-- Callback type : Moved --

XY [707, 948] PrevXY [692, 943] Moved

Update

Usually the previous coordinate links to the current coordinate in a chain. But in this rare case, notice that the Moved and the Ended of each marked finger carry exactly the same coordinate data. This is not Native Touch's bug; what you get is completely native iOS behavior. So if your algorithm pairs the last touch's position with the present touch's previous position, it will fail here. A fallback that should work is to compare current position against current position when the usual linking fails.
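A minimal sketch of that fallback, assuming you keep the last reported position of each made-up finger ID in a dictionary. The dictionary layout and the function name are hypothetical.

```python
# Hypothetical sketch: link an incoming touch to a tracked finger.
# tracked maps made-up finger ID -> last reported (x, y).
def find_finger(tracked, x, y, prev_x, prev_y):
    # Usual case: this touch's previous position is where the finger last was.
    for fid, pos in tracked.items():
        if pos == (prev_x, prev_y):
            return fid
    # Fallback for the Moved-Ended special case: the Ended repeats the Moved's
    # data, so its *current* position equals the finger's last position.
    for fid, pos in tracked.items():
        if pos == (x, y):
            return fid
    return None
```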

Cancelled phase

I observe that we get the Cancelled phase when, with a touch down, you massage the whole screen with your entire hand, slap the screen, change the screen orientation, or press the home button. I am not sure whether there are other circumstances, but be prepared for them! I have seen 4 kinds of Cancelled:

- If the previous event was Moved, the Cancelled can link to it in a chain, as if it were just another Moved, or...

- it can end up like the Moved-Ended special case (repeating the previous Moved's data).

- If the previous event was Began, the Cancelled carries the same data (position = previous position).

- The last, problematic kind: some Cancelled touches do not link to any previous touch at all.
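One way to cope with these 4 kinds, sketched under my own assumptions rather than taken from Native Touch: link the Cancelled by its previous position (kinds 1 and 3) or by its current position (kind 2) and treat it as Ended; if it links to nothing (kind 4), give up and end every finger you track. The tracked dictionary and the end_finger handler are hypothetical.

```python
# Hypothetical sketch: treat Cancelled as Ended. tracked maps finger ID ->
# last reported (x, y); end_finger is your own "finger lifted" handler.
def handle_cancelled(tracked, x, y, prev_x, prev_y, end_finger):
    fid = None
    for candidate, pos in tracked.items():
        # Kinds 1 and 3 link by previous position (for kind 3 it equals the
        # current one); kind 2 repeats the last Moved, so the current matches.
        if pos in ((prev_x, prev_y), (x, y)):
            fid = candidate
            break
    if fid is not None:
        del tracked[fid]
        end_finger(fid)
        return
    # Kind 4 links to nothing. Assumption: play safe and end every finger.
    for candidate in sorted(tracked):
        end_finger(candidate)
    tracked.clear()
```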

In all cases except the last one, you can treat the Cancelled as Ended and it will be fine. For example, if you hold touches down before rotating the screen, all of them are Cancelled. At that moment your fingers are still on the screen, but because their state has already become Cancelled, you can lift them without triggering any Ended (so you must manually treat those as Ended in your touch receiving code). The following example shows a sequence that occurred when I massaged the screen once. Both Cancelled are of the 3rd kind (they follow immediately from a Began).

-- Callback type : Began --

XY [1231, 1131] PrevXY [1231, 1131] Began <--- 1

XY [468, 353] PrevXY [468, 353] Began

XY [890, 310] PrevXY [890, 310] Began

Update

-- Callback type : Began --

XY [1086, 898] PrevXY [1086, 898] Began

XY [789, 723] PrevXY [789, 723] Began

XY [1297, 1039] PrevXY [1297, 1039] Began

XY [1213, 602] PrevXY [1213, 602] Began

XY [1401, 814] PrevXY [1401, 814] Began

-- Callback type : Moved --

XY [868, 358] PrevXY [890, 310] Moved

XY [457, 450] PrevXY [468, 353] Moved

-- Callback type : Cancelled --

XY [1231, 1131] PrevXY [1231, 1131] Cancelled <--- 1

-- Callback type : Began --

XY [294, 1630] PrevXY [294, 1630] Began <--- 2

-- Callback type : Moved --

XY [1095, 822] PrevXY [1086, 898] Moved

XY [853, 410] PrevXY [868, 358] Moved

XY [451, 425] PrevXY [457, 450] Moved

XY [1221, 526] PrevXY [1213, 602] Moved

XY [1406, 787] PrevXY [1401, 814] Moved

-- Callback type : Ended --

XY [789, 723] PrevXY [789, 723] Ended

XY [1297, 1039] PrevXY [1297, 1039] Ended

Update

-- Callback type : Moved --

XY [1104, 733] PrevXY [1095, 822] Moved

XY [449, 345] PrevXY [451, 425] Moved

XY [1230, 437] PrevXY [1221, 526] Moved

XY [1399, 768] PrevXY [1406, 787] Moved

-- Callback type : Ended --

XY [853, 410] PrevXY [868, 358] Ended

-- Callback type : Cancelled --

XY [294, 1630] PrevXY [294, 1630] Cancelled <--- 2

-- Callback type : Began --

XY [11, 1073] PrevXY [11, 1073] Began

-- Callback type : Moved --

XY [1370, 761] PrevXY [1399, 768] Moved

Pressing home button

-- Callback type : Moved --

XY [410, 941] PrevXY [343, 879] Moved

XY [1211, 930] PrevXY [1065, 948] Moved

XY [587, 1151] PrevXY [526, 1098] Moved

XY [1018, 1147] PrevXY [867, 1135] Moved

XY [1330, 184] PrevXY [1219, 286] Moved

Update

-- Callback type : Moved --

XY [722, 772] PrevXY [410, 941] Moved <--- 1

XY [1450, 520] PrevXY [1211, 930] Moved <--- 2

XY [1008, 913] PrevXY [587, 1151] Moved <--- 3

XY [1326, 828] PrevXY [1018, 1147] Moved <--- 4

XY [999, 344] PrevXY [1330, 184] Moved <--- 5

-> applicationWillResignActive()

Update

-- Callback type : Moved --

XY [675, 747] PrevXY [722, 772] Moved <--- 1

XY [1410, 518] PrevXY [1450, 520] Moved <--- 2

XY [951, 896] PrevXY [1008, 913] Moved <--- 3

XY [1266, 828] PrevXY [1326, 828] Moved <--- 4

-- Callback type : Cancelled --

XY [999, 344] PrevXY [1330, 184] Cancelled <--- 5 (OK)

-- Callback type : Moved --

XY [1200, 626] PrevXY [1286, 566] Moved <--- From nowhere

XY [1011, 885] PrevXY [1111, 860] Moved <--- From nowhere (7)

XY [683, 858] PrevXY [779, 873] Moved <--- From nowhere

-- Callback type : Cancelled --

XY [536, 696] PrevXY [614, 724] Cancelled <--- From nowhere

-- Callback type : Moved --

XY [757, 560] PrevXY [1089, 706] Moved <--- From nowhere (9)

XY [766, 1120] PrevXY [556, 829] Moved

-- Callback type : Cancelled --

XY [864, 904] PrevXY [1011, 885] Cancelled <--- 7

-- Callback type : Moved --

XY [645, 1252] PrevXY [700, 1203] Moved

-- Callback type : Cancelled --

XY [755, 664] PrevXY [757, 560] Cancelled <--- 9

-- Callback type : Cancelled --

XY [594, 1404] PrevXY [611, 1315] Cancelled

-> applicationDidEnterBackground()

-> applicationWillEnterForeground()

-> applicationDidBecomeActive()

Update (Crash!)

After pressing the home button with 5 fingers down, you get the signal -> applicationWillResignActive(). After this comes one last Unity Update, followed by one last batch of touch callbacks with no Unity Update after them. In this last batch we see some anomalies: a series of Moved that do not really connect to any previous Moved, and then some Cancelled with coordinates close to, but not exactly matching, the previous ones. Some Cancelled even link to those mysterious Moved. The best we can do is to "panic" when entering the background and reset the touch receiving code, so that when we come back to the game it starts fresh, as if nothing happened.

If you use MonoBehaviour's OnApplicationPause(bool), you must "panic" when the bool is false (coming back from pause). The call with true arrives just after -> applicationWillResignActive(), which is still before the last Update and before those erroneous touches.
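A minimal sketch of where the reset belongs, assuming your touch receiving code keeps its state in one object. on_application_pause stands in for the bool that Unity passes to MonoBehaviour.OnApplicationPause(bool); the class and its methods are hypothetical.

```python
# Hypothetical sketch of the "panic" reset on returning from pause.
class TouchState:
    def __init__(self):
        self.tracked = {}  # finger ID -> last (x, y)

    def on_touch(self, fid, x, y):
        self.tracked[fid] = (x, y)

    def on_application_pause(self, paused):
        # paused == True arrives right after applicationWillResignActive,
        # before the last Update and the erroneous touches, so resetting
        # there is too early. Reset when coming back instead.
        if not paused:
            self.tracked.clear()
```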

VS Unity's API

When you get only 1 callback, Unity of course reflects it in the next frame. This raises an interesting question: what happens when touches are queued up? All tests in this section were done with only 1 finger.

Moved-Ended : Missing movement

- Red: The Native Touch callback is Began, and Unity follows one frame later. Nothing special here. Native Touch's Began always has the previous coordinate equal to the current one, while Unity sets the delta to 0 to signify the same thing.

- Blue/green: This is Moved-Ended. Unity's job is to present data that connects to the previous data. Its only course of action is to connect green to red; otherwise the API user would never see the touch end, even though the native data has already ended.

Without Native Touch : We permanently lose the blue movement data just before the Ended.

Began-Moved : Delta-ed Began

- Red/magenta: I touch down in a sliding way so that Began and Moved stick together. In this case you do not lose data, as Unity has a way to "encode" it: as a delta on the Began phase. I will call this a "delta-ed Began" from now on. Remember that a usual Unity Began has a delta of 0.

- Green: This is just Moved, and Unity has no problem connecting it to the previous one.

Without Native Touch : We lose nothing, but remember to nab the first bit of movement from the delta of Began.
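If you stay with Unity's API, you can try to split a delta-ed Began back into the real touch-down point and the first movement. Below is a minimal sketch, assuming the delta is measured from the native Began position and that you copy Touch.position and Touch.deltaPosition into plain tuples. The function is hypothetical.

```python
# Hypothetical sketch: split a "delta-ed Began" back into the real touch-down
# point and the first movement. position/delta correspond to Unity's
# Touch.position and Touch.deltaPosition of a Began touch.
def split_delta_ed_began(position, delta):
    """Return a list of (phase, (x, y)) events: Began at the touch-down point,
    followed by Moved if the Began carried a non-zero delta."""
    x, y = position
    dx, dy = delta
    if (dx, dy) == (0, 0):
        return [("Began", (x, y))]  # ordinary Began, nothing folded in
    return [("Began", (x - dx, y - dy)), ("Moved", (x, y))]
```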

3 Callbacks : ID Branching

- Red/magenta: Same as the previous ones; I try to make it start with a delta-ed Began.

- Green/blue/turquoise: These are 3 callbacks: Moved, Ended, Began. Unity has only 1 Began from before to work with, so how can it connect 3 events to that? First, priority goes to the Ended event: Unity connects the blue Ended to the previous magenta data. What's left are Moved and Began, and the next priority goes to the turquoise Began, which Unity starts as a new touch ID = 1 with phase Began. The remaining green Moved cannot appear out of nowhere, has nothing to pair with, and is thus discarded.

- Orange/purple: This is Moved-Ended. We ended touch ID = 0 earlier, so the only choice is to end touch ID = 1 with the new purple Ended.

Without Native Touch: we lose both the green and the orange movement before each Ended. Also, the touch IDs make it look like another finger touched down in the same frame the first finger went up, but in reality this sequence was produced by only 1 finger. Remember that natively on iOS we don't have a finger ID, so the finger ID Unity makes up does not always mean multitouch.

You could interpret this as a "bug" in Unity: without Native Touch, even with a single touch you could randomly get touch ID = 1 if the game lags at a certain moment. Your logic should always be prepared for Input.touches.Length > 1 by default; then you are safe on iOS.

4 Callbacks : Ended-as-Began

- Red/green/blue/magenta: I touch the screen in a sliding movement, then immediately lift and touch down again very fast while the device is quite busy, resulting in 4 callbacks: Began-Moved-Ended-Began. This time Unity uses the Ended as a delta-ed Began paired with the first actual Began. I call this "Ended-as-Began". What's left are the green Moved and the magenta Began. Following the same reasoning, Unity's choice is to use the Began as a new ID = 1 and discard the Moved, which cannot appear out of nowhere. This way Unity instantly starts with touch ID = 0 and ID = 1.

- Turquoise/orange: This is Moved-Ended. It creates a difficult problem for Unity, which is now holding Began + Began. First, Unity uses the Ended. I don't know how Unity chooses which touch to pair it with, as both are possible, but this time it chose to end touch ID = 1. The remaining Moved cannot be paired with the other Began, for the same reason as before: that touch would then never end for the API user. So Unity ended the remaining touch as a last resort, and the Moved was discarded as usual.

Without Native Touch: from the API user's standpoint it looks like Began + Began followed by Ended + Ended, as if 2 fingers each tapped with a bit of sliding movement, but in reality it is one finger doing a double tap. We have also lost the turquoise movement.

The final takeaway is this: Native Touch's callback is the ground truth, and what you get from Unity's input API is Unity's interpretation of that truth. With Native Touch, even if you gain no speed advantage, you get the data that Unity discarded. What to do with it is up to you.

Android

Android touch is also callback based

Yes, it is the same idea as iOS: the same down/up/move/cancel callbacks, without stationary.

BUT the important not-so-obvious points are different:

- Android touch DOES provide a finger/pointer ID.

- But Android touch DOES NOT provide the previous point of each touch event; only the current point is given. To infer movement, you have to pair each entry with the previous entry of the same touch ID and compute the difference yourself. (This is kind of the inverse of what iOS gives you...)

- For other available/unavailable features, see the Reference page.
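Here is a minimal sketch of that bookkeeping, assuming the phases arrive in Unity's TouchPhase numbering (as Native Touch converts them on Android). The class name is hypothetical.

```python
# Hypothetical sketch: Android gives a pointer ID but no previous position,
# so remember the last position per pointer ID and compute deltas yourself.
# Phase values follow Unity's TouchPhase numbering.
BEGAN, MOVED, ENDED, CANCELLED = 0, 1, 3, 4


class PointerDeltaTracker:
    def __init__(self):
        self.last_pos = {}  # pointer ID -> last (x, y)

    def on_touch(self, phase, pointer_id, x, y):
        """Return (dx, dy) since this pointer's previous callback."""
        if phase == BEGAN:
            prev = (x, y)  # a new touch has no movement yet
        else:
            prev = self.last_pos.get(pointer_id, (x, y))
        delta = (x - prev[0], y - prev[1])
        if phase in (ENDED, CANCELLED):
            self.last_pos.pop(pointer_id, None)  # Android may reuse this ID later
        else:
            self.last_pos[pointer_id] = (x, y)
        return delta
```

A (0, 0) delta on a Moved is also how you would detect a stationary finger on Android.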

What is really happening natively

Get ready for chaos. To understand what Native Touch is doing, we first have to learn what a pure Android developer has to do to process touches in a native Android app.

One Android callback is called an "action", and it comes with one MotionEvent that contains multiple touches. This is like iOS, where one native callback contains multiple touches.

It also has the concept of "historical values", which are not the same as "previous values" (so Android still lacks the previous touch value, as I said). For example, a touch has position X = 5 and 2 historical positions, X = 2 and X = 4. The previous position of this touch is neither 2 nor 4 but might be -1, so yes, you still need to remember it yourself. What the historical values tell you is that before arriving at X = 5, the touch moved past X = 2 and X = 4. Anyway, Native Touch currently discards all historical positions to be in line with iOS.

The problem that follows is that this "bundle of touches" (pack) comes tagged with only one phase/state variable. If 3 fingers are currently down and the MotionEvent says "move", it means at least one of them moved, but we don't know which one (you have to infer it yourself). If the MotionEvent says "down", then one of them just went down. Luckily, for up and down (not move) we can tell which touch did it, thanks to Android's "pointer ID" plus the rule that one action carries only one "action index", no matter how many touches it contains; the action index gets us the pointer ID that caused the state shown on the motion event. But we still don't know whether the other two stayed still or moved. With careful position + touch ID bookkeeping (not provided by the Android touch API) we can tell whether the other touches moved or stayed still. This is certainly what Unity does for us, since Unity has a stationary phase available.

Another thing about Android's phases is that there are 2 versions of down: MotionEvent.ACTION_DOWN and MotionEvent.ACTION_POINTER_DOWN.

You get the latter (with "POINTER") when one touch is already down and another touch goes down. But even when the motion event says that, the already-down touch might be moving at the same time the new touch goes down, and the native side still only says MotionEvent.ACTION_POINTER_DOWN, with nothing to indicate whether the other touch stayed still or moved. If it says just ACTION_DOWN, this is the first touch in the MotionEvent bundle, and it surely just went down (the easy case). The same goes for up. One more gotcha: there is no ACTION_POINTER_MOVE.

The phase only describes the new touch. If there is no new touch, MotionEvent.ACTION_MOVE signifies that at least one of the currently down touches moved.
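For reference, this is how the single action and its action index share one int. The sketch reproduces the bit layout in Python; in Java you would simply call getActionMasked(), getActionIndex() and getPointerId(index) on the MotionEvent. The constant values match android.view.MotionEvent, while decode_action itself is hypothetical.

```python
# Constant values as defined in android.view.MotionEvent.
ACTION_MASK = 0xFF
ACTION_POINTER_INDEX_MASK = 0xFF00
ACTION_POINTER_INDEX_SHIFT = 8
ACTION_NAMES = {0: "DOWN", 1: "UP", 2: "MOVE", 3: "CANCEL",
                4: "OUTSIDE", 5: "POINTER_DOWN", 6: "POINTER_UP"}


def decode_action(action, pointer_ids):
    """action: raw int from MotionEvent.getAction().
    pointer_ids: pointer ID at each pointer index of the same event.
    Returns (action name, pointer ID that caused it, or None)."""
    masked = action & ACTION_MASK  # what getActionMasked() returns
    index = (action & ACTION_POINTER_INDEX_MASK) >> ACTION_POINTER_INDEX_SHIFT  # getActionIndex()
    name = ACTION_NAMES.get(masked, str(masked))
    # Only the down/up variants point at one specific pointer. MOVE only says
    # that at least one pointer moved, without saying which.
    owner = pointer_ids[index] if masked in (0, 1, 5, 6) else None
    return name, owner
```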

Unpacking touches into Native Touch callbacks, and a conversion to be in line with iOS

Like on iOS, I unpack the MotionEvent and report each touch consecutively in a separate callback with one of these states: MotionEvent.ACTION_DOWN, MotionEvent.ACTION_UP, MotionEvent.ACTION_MOVE, and MotionEvent.ACTION_CANCEL (rare). You get the phase as an int value, and you never get the POINTER variants. Each callback contains the position, NO previous position, WITH a pointer ID, the phase, and a timestamp.

However, Android's native int values line up neither with Unity's TouchPhase (Began-Moved-Stationary-Ended-Canceled = 0-1-2-3-4) nor with iOS's int phase (began-moved-stationary-ended-cancelled = 0-1-2-3-4). In Android it is like this: DOWN-UP-MOVE-CANCEL-OUTSIDE-POINTER_DOWN-POINTER_UP = 0-1-2-3-4-5-6. So I decided to do another conversion: MOVE becomes 1 instead of 2, UP becomes 3 instead of 1, and CANCEL becomes 4 instead of 3 (DOWN stays 0). This means you can get Unity's TouchPhase directly from both the iOS and the Android side.
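The conversion boils down to a small lookup table. Below is a sketch, assuming the POINTER variants fold into plain DOWN/UP (consistent with never receiving them) and that ACTION_OUTSIDE has no equivalent. It is illustrative, not Native Touch's Java source.

```python
# Sketch of the conversion: Android masked action -> Unity TouchPhase int.
ANDROID_TO_UNITY_PHASE = {
    0: 0,  # ACTION_DOWN         -> Began
    5: 0,  # ACTION_POINTER_DOWN -> Began
    2: 1,  # ACTION_MOVE         -> Moved
    1: 3,  # ACTION_UP           -> Ended
    6: 3,  # ACTION_POINTER_UP   -> Ended
    3: 4,  # ACTION_CANCEL       -> Canceled
}


def to_unity_phase(masked_action):
    """Return Unity's TouchPhase int, or None for actions with no equivalent
    (for example ACTION_OUTSIDE)."""
    return ANDROID_TO_UNITY_PHASE.get(masked_action)
```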

No stationary phase

Remember that in reality a MotionEvent contains only one action phase indicator (in contrast to iOS, where we can easily know each touch's phase inside the bundle). So how did I "make up" the phases in the case of multitouch? The rule I use is that every time I unpack a motion event, the touch that just went up or down is guaranteed to get the up or down phase, while all other touches at that time are reported as MOVE, whether or not they really moved. Unlike iOS, Android does not even define a "stationary" phase.

- Example #1: 3 fingers are down, then one of them moves. iOS natively reports moved-stationary-stationary (in [event allTouches]). In Android, we get 3 callbacks of moved-moved-moved.

- Example #2: 2 fingers are down, then a 3rd finger touches the screen. iOS natively reports began-stationary-stationary (in [event allTouches]). In Android, we get 3 callbacks of down-moved-moved.

So from the perspective of your game's callback logic: on iOS you infer stationary either from the stationary phase (only if you enabled the allTouches version) or from the lack of an up for a particular touch (it must be down and not moving, so stationary). On Android, additionally, whenever you receive a MOVED event you have to check whether the touch really moved. The "no up = stationary" rule still applies.
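The unpacking rule can be sketched as follows. This illustrates the rule described above rather than reproducing Native Touch's Java code: the function name and tuple layout are hypothetical, phases are Android's masked action values before the TouchPhase conversion, and treating ACTION_CANCEL as cancelling every pointer is an assumption.

```python
# Android masked action values (android.view.MotionEvent).
DOWN, UP, MOVE, CANCEL, POINTER_DOWN, POINTER_UP = 0, 1, 2, 3, 5, 6


def unpack(masked_action, action_index, pointers):
    """pointers: list of (pointer_id, x, y) in pointer-index order.
    Returns one (phase, pointer_id, x, y) callback per pointer."""
    if masked_action == CANCEL:
        # Assumption: a cancel applies to every pointer in the event.
        return [(CANCEL, pid, x, y) for pid, x, y in pointers]
    # The POINTER variants are reported as plain DOWN / UP.
    owner_phase = {DOWN: DOWN, POINTER_DOWN: DOWN,
                   UP: UP, POINTER_UP: UP}.get(masked_action, MOVE)
    callbacks = []
    for index, (pid, x, y) in enumerate(pointers):
        # Only the pointer at the action index gets DOWN/UP; everyone else
        # is reported as MOVE, whether it really moved or not.
        phase = owner_phase if index == action_index else MOVE
        callbacks.append((phase, pid, x, y))
    return callbacks
```

On the game side, compare each MOVE against the last position you stored for that pointer ID; an unchanged position means the finger is actually stationary.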

The strange phenomenon "double pack"

You learned that Android's touches come packed and that I unpack them. Good! That's not all, though, because sometimes you will mysteriously get tons more Moved data with nothing actually happening at all. Look at this real situation.

Touch down with the index, middle, ring, and pinky fingers in this order, and you get touch IDs 0, 1, 2, 3 assigned to the fingers in order, right? According to what you learned, here are the number and details of the unpacked callbacks for each touch. Remember that each pack contains multiple coordinates, sure, but only 1 touch state, plus an ID telling which touch that state belongs to (so the others must be inferred).

- Index touch - 1 touch in this pack - Began ID 0 - Native Touch sends 1 callback of Began ID 0

- Middle touch - 2 touches in this pack - Began ID 1 - Native Touch sends 2 callbacks of Moved ID 0, Began ID 1

- Ring touch - 3 touches in this pack - Began ID 2 - Native Touch sends 3 callbacks of Moved ID 0,1, Began ID 2

- Pinky touch - 4 touches in this pack - Began ID 3 - Native Touch sends 4 callbacks of Moved ID 0,1,2 Began ID 3

Those are all perfectly reasonable given what we have learned so far. Now, in this 4-fingers-down state, gently lift any finger so that it does not move even one bit. From what we have learned, you could guess that:

- If I lift up the pinky finger it would result in this : "4 touches in this pack - Ended ID 3 - Native Touch sends 4 callbacks of Moved ID 0,1,2 Ended ID 3".

- If I lift up the ring finger it would result in this : "4 touches in this pack - Ended ID 2 - Native Touch sends 4 callbacks of Moved ID 0,1,3 Ended ID 2".

But who would have guessed that it is different if you lift the index or middle finger instead?

- If I lift the middle finger, it results in 2 consecutive packs with exactly the same timestamp!

  - 4 touches in this pack - Ended ID 1. This is reasonable, so Native Touch sends 4 callbacks of Moved 0,2,3 Ended 1.

  - 3 touches in this pack - Moved ID 0. This is weird. Nothing moved, since we just lifted a finger! The only correct part is that there are 3 touches left, because one just went up.

- If I lift the index finger, it again results in 2 consecutive packs with exactly the same timestamp!

  - 4 touches in this pack - Ended ID 0. This is reasonable, so Native Touch sends 4 callbacks of Moved 1,2,3 Ended 0.

  - 3 touches in this pack - Moved ID 1. Again this is weird; we didn't move.

I call this a "double pack". The 2nd, unwanted pack always has phase Moved, with ID = the lowest ID still held down at that time. From my investigation, the condition for this to occur is having 3 or more touches BEFORE lifting one of them, and the lifted touch must be ID 0 or ID 1. In that case, a mysterious useless pack follows.

A double pack can also occur on touch down, not just up, but then the order is reversed: the useless Moved pack comes before the real Began. This is easy to reproduce: in the 4-fingers-down scenario, lift either the index or the middle finger (producing a "double pack up"), then put it down again. Android picks the lowest unoccupied ID for the new touch, and if that is 0 or 1, a "double pack down" occurs. The condition for a "double pack down" is almost the same: you must have 3 or more touches AFTER touching down, and the new touch has to take ID 0 or 1. So the only way for this to happen is to vacate ID 0 or 1 while ID 2 and 3 (or higher) are occupied; then the next touch, with any finger, reproduces it.

To make the rule clearer, imagine this more complex scenario: 5 fingers down, sequentially from thumb to pinky. If I lift the thumb and then the index finger, that leaves ID 2, 3, 4 held down and causes 2 "double pack ups", because the IDs that went vacant were 0 and 1. If I then lift the pinky, it will NOT cause a double pack, since ID 4 is the one going up. But if I put the pinky down again, it causes a "double pack down": the pinky takes the vacant ID 0 instead of its old ID 4, and with the finger count going from 2 to 3, all conditions for a double pack are satisfied.

Finally, and most importantly, understand that this is not Native Touch's bug. Phew. Native Touch will NOT try to ignore the "double pack", because I suspect this might be unintended behaviour in Android (it is not documented anywhere; if someone knows why this happens, please let me know). If it gets fixed some time in the future, the ignoring code might cause problems. On the other hand, not ignoring it causes no wrong behaviour, since your game should already be ready to interpret any Moved data that stays still as Stationary. It is just more callbacks. When this happens, with 3 fingers you get 5 calls instead of 3, and with 4 fingers you get 7 calls instead of 4. In general, with n fingers you get n + (n - 1) = 2n - 1 callbacks, or (2n - 1)/n times the intended count, and the extra ones are useless Moved with perfectly still coordinates.
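If your game would rather skip double packs than process them, a check like the following could flag a pack that changes nothing. This is a minimal sketch, assuming you group callbacks that share the same timestamp into one pack and keep the last known position per pointer ID yourself. The names are hypothetical, and phases are Unity TouchPhase values as Native Touch reports them.

```python
# Hypothetical game-side check: a pack of Moved callbacks in which no pointer
# changed position carries no new information (for example the useless half
# of a "double pack") and can be handled as all-stationary.
MOVED = 1  # Unity TouchPhase.Moved


def is_still_pack(pack, last_pos):
    """pack: list of (phase, pointer_id, x, y) sharing one timestamp.
    last_pos: dict pointer_id -> last known (x, y)."""
    return bool(pack) and all(
        phase == MOVED and last_pos.get(pid) == (x, y)
        for phase, pid, x, y in pack)
```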

The threaded timing of Android touch

Surprise, Android touch's static callback is NOT IN THE MAIN THREAD.

The question is how can that be? The callback was definitely called from the "main thread" in Java side because it is one of the first line in the main activity code, surely we not yet had any chance to spawn a thread. The answer could be that Unity considers that a non-main thread, because on Android Unity itself is running on a thread with the Runnable API. And so if the thread Unity is currently on is the definition of "main thread", where we call the callback is not the main thread anymore.

From a timing perspective this is actually a blessing: the callback is no longer fixed to a certain point in the frame like on iOS, and its logic runs in parallel with your normal Unity code. As a result, if you are lucky, you can sometimes be more than 1 frame faster. In my tests some touches arrived 2 frames earlier than Unity's event trigger.

Without Native Touch, when you touch the screen you always get this:

[Frame 499] (U1) (U2) (U3) - [Frame 500] (Event Trigger) (U1) (U2) (U3)

With Native Touch on Android you get this:

[Frame 499] (U1) (U2) (U3) - [Frame 500] (Event Trigger) (U1) (U2) (U3)

|------ (NATIVE TOUCH) ------|

This means that even between two lines of your static touch callback on Android, your other MonoBehaviour code may be running in parallel.

The hard crash

So anything that is main-thread-only will not work inside that static method. This includes most of Unity's API from UnityEngine, for example Time.___, setting any transform, changing anything in the UI, etc.

The difficulty has also been turned up to the max. Previously, if you used the C# Job System, the C# Task Parallel Library, ECS jobs, etc., you would get something like this when trying to read Time.frameCount from another thread:

08-22 12:43:00.463 5518-5518/com.Exceed7.NativeTouch E/Unity: get_frameCount can only be called from the main thread.

Constructors and field initializers will be executed from the loading thread when loading a scene.

Don't use this function in the constructor or field initializers, instead move initialization code to the Awake or Start function.

UnityEngine.Time:get_frameCount()

NativeTouchDemo:NativeTouchStaticReceiver(Int32, Int32, Int32, Int32, Int32, Double, Int32) (at /Users/Sargon/Documents/Projects/NativeTouch/Assets/NativeTouch/Demo/NativeTouchDemo.cs:62)

E7.Native.TouchProxy:Invoke(String, Object[]) (at /Users/Sargon/Documents/Projects/NativeTouch/Assets/NativeTouch/Managed/NativeTouchAndroid.cs:58)

UnityEngine.AndroidJavaProxy:Invoke(String, AndroidJavaObject[]) (at /Users/builduser/buildslave/unity/build/Runtime/Export/AndroidJavaImpl.cs:119)

UnityEngine._AndroidJNIHelper:InvokeJavaProxyMethod(AndroidJavaProxy, IntPtr, IntPtr) (at /Users/builduser/buildslave/unity/build/Runtime/Export/AndroidJavaImpl.cs:745)

But now the static callback is called from the native side, the C part. You no longer have the protection of C#'s error catching. Even though you are writing C#, keep in mind that any crash will be much more severe. (C++ programmers will know what I am talking about.)

For example, using Time.frameCount, Time.realtimeSinceStartup, etc. in the callback now results in... sometimes a success! Isn't that nice? But actually the safety throw has simply been bypassed, and you are lucky that the API only reads a value. (On some Unity versions it still throws the friendly error. In short, just don't do it.) What about audioSource.PlayOneShot()? If you do that, you get a SIGILL hard crash 100% of the time, where previously you would get a friendly, readable error telling you not to do that from a sub-thread.

Function SoundHandleAPI *SoundHandle::operator->() const may only be called from main thread!

09-05 12:53:00.106 16448 16448 E mono : Unhandled Exception: System.ExecutionEngineException: SIGILL

09-05 12:53:00.106 16448 16448 E mono : at UnityEngine.AudioSource.Play () [0x00000] in filename unknown:0

09-05 12:53:00.106 16448 16448 E mono : at NativeTouchDemo.NativeTouchStaticReceiver (NativeTouchData ntd) [0x00000] in filename unknown:0

09-05 12:53:00.106 16448 16448 E mono : at (wrapper delegate-invoke) E7.Native.NativeTouch/MinimalDelegate:invoke_void__this___NativeTouchData (E7.Native.NativeTouchData)

09-05 12:53:00.106 16448 16448 E mono : at E7.Native.NativeTouchInterface.NativeTouchMinimalCallback (NativeTouchData ntd) [0x00000] in filename unknown:0

09-05 12:53:00.106 16448 16448 E mono : at (wrapper native-to-managed) E7.Native.NativeTouchInterface:NativeTouchMinimalCallback (E7.Native.NativeTouchData)

09-05 12:53:00.120 16448 16467 I Adreno : DequeueBuffer: dequeueBuffer failed

If you try to change a uGUI Text, there is about a 10% chance that it fails and crashes the main thread, leaving only Native Touch's non-main thread alive. This is because the change happens in parallel, and sometimes you are unlucky enough to change the text while it is being rebuilt for drawing.

09-05 12:58:06.723 16982 16982 Unity E Trying to add Content (UnityEngine.UI.Text) for graphic rebuild while we are already inside a graphic rebuild loop. This is not supported.

09-05 12:58:06.723 16982 16982 Unity E (Filename: ./Runtime/Export/Debug.bindings.h Line: 43)

So be careful when finding out by trial and error what is possible on the other thread, because, as in the last example, it might only succeed at first. Check out Jackson Dunstan's excellent article where he collected the things that are job-safe.

And what about exceptions from your own mistakes? Normally in Unity development, when you hit a NullReferenceException, your game can usually continue (correctly or not, depending on the code) because the exception is caught. The code just stops at that point, but the game loop keeps running. However, if you hit a NullReferenceException in an Android touch callback, which is not on the main thread, nothing catches it. The game will freeze! You should fix both cases anyway, but this is a heads-up that the crash behaviour might be surprising.

Multitouch behaviour

Like iOS, but all the callbacks arrive together on the same non-main thread.

How to make use of the touch from a different thread then?

You can talk to the main thread by passing data through static variables. But you have to be careful not to read and write that memory at the same time as something else on your main thread, or you might get unexpected results. For example, put all the touches in one of the concurrent collections, or use the lock keyword to make some code regions mutually exclusive. This makes that variable a kind of main-thread sync point for your touches.
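The sync point can be sketched with a thread-safe queue. This is a minimal Python sketch of the pattern, not Native Touch's API. `native_touch_callback` and `update` are hypothetical names standing in for your static callback and a MonoBehaviour's Update(). In C#, `ConcurrentQueue<T>` from `System.Collections.Concurrent`, with `Enqueue` and `TryDequeue`, plays the same role.

```python
import queue
import threading

# The "static variable": one thread-safe queue shared by both threads.
touch_queue = queue.Queue()


def native_touch_callback(pointer_id, x, y):
    """Touch thread: only record the data, never call main-thread-only APIs here."""
    touch_queue.put((pointer_id, x, y))


def update():
    """Main thread, once per frame: drain everything that has arrived so far."""
    touches = []
    while True:
        try:
            touches.append(touch_queue.get_nowait())
        except queue.Empty:
            break
    return touches


def simulate_touch_thread():
    for i in range(5):
        native_touch_callback(0, i * 10, 0)


t = threading.Thread(target=simulate_touch_thread)
t.start()
t.join()
frame_touches = update()
print(len(frame_touches))  # 5
```

Draining with `get_nowait` means the main thread never blocks waiting for touches. Anything that arrives after the drain is simply picked up in the next frame.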

Kinds of advantage patterns in Android

1 frame advantage

The same rule as on iOS is still in effect: when you ask Unity, it answers with the touch captured in the previous frame.

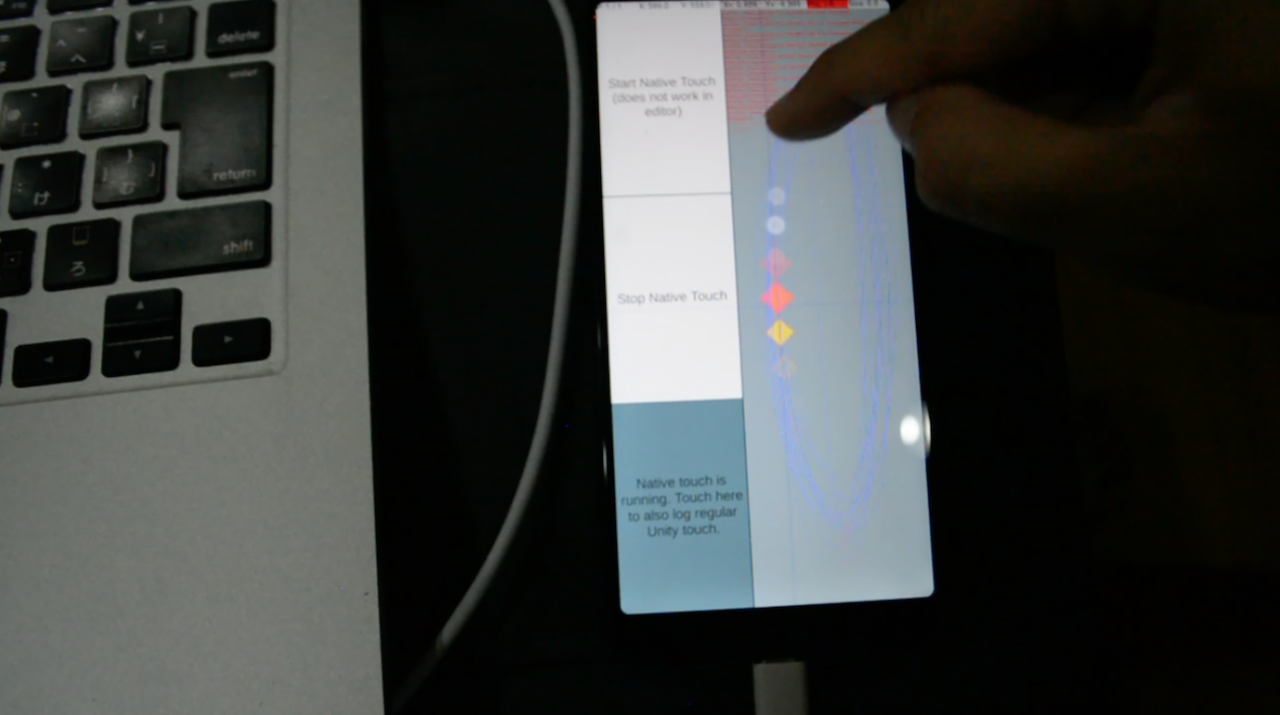

But on Android we are lucky! Look at this image:

You can see that Unity is using the touch from the prior frame, but our callback is positioned at the beginning of this same frame with fresh touch data. That means it is possible to use this data in place of Unity's outdated touch data, unlike on iOS, where we cannot make it in time. Where Unity uses the red data, we can already use the yellow data; where Unity will use the yellow data, we can use the magenta data, and so on.

Using this data to set the position of something results in a 1 frame advantage, because it is still not too late for rendering. In the included demo, I have set the red square to follow the Native Touch data and the yellow square to follow Unity's normal API. Both are set in the same Update(), but the red square can go ahead of the yellow one because the yellow square uses touch data from the previous frame. Native Touch makes it possible to be more up to date.
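If all you need is the freshest position (like the red square), a single lock-protected "latest touch" slot is enough. This is a minimal Python sketch. It assumes the model described above, where the callback lands at the beginning of the frame while Unity's API reports the previous frame's touch. `LatestTouch` and the finger positions are hypothetical.

```python
import threading


class LatestTouch:
    """Most recent touch position: written by the touch thread, read by the main thread."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pos = None

    def write(self, x, y):
        with self._lock:
            self._pos = (x, y)

    def read(self):
        with self._lock:
            return self._pos


finger_path = [(0, 0), (10, 0), (20, 0)]  # hypothetical finger position in each frame
slot = LatestTouch()
unity_touch = None  # model of Unity's API: the touch captured in the previous frame

for frame, pos in enumerate(finger_path):
    # Callback thread delivers this frame's touch at the beginning of the frame.
    cb = threading.Thread(target=slot.write, args=pos)
    cb.start()
    cb.join()
    red = slot.read()       # follows Native Touch data
    yellow = unity_touch    # follows Unity's touch API
    print(frame, red, yellow)
    unity_touch = pos       # Unity will report this position next frame
```

Storing only the latest value, instead of queueing every event, fits this use case because positioning a square only cares about where the finger is now.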

NO frame advantage

Next, at the bottom of the first image you can see that in the same frame where we get the magenta data, we also get another piece of data, the green one. This image shows the effect of this:

In the next frame we have no callback, because the previous frame got 2 callbacks, one at the beginning and another at the end. (As I said, Android's callback is threaded and its timing is mostly free-flowing.) In this frame Unity still follows the rule of using the data from the previous frame, the green data. Notice that the magenta data has been completely skipped by Unity. In this frame there is no Native Touch callback, so we must also use the green data. When this pattern happens we get NO frame advantage, since at best we have to use the data from the previous frame, same as Unity.

NO frame advantage -> duplicated Unity touch.

When this "NO frame advantage" case happens, imagine that we don't have Native Touch. We get the same touch data from Unity's touch API in 2 consecutive frames, 20619 and 20620, and the latter one will have the Stationary phase. Depending on how you view it, you can think of this as a Unity "bug" that reports a Stationary touch even while a finger is moving continuously. (In reality we are always moving, and Native Touch reports it as such.)

This duplicated Unity touch occurs 1 frame after a frame in which we had NO frame advantage. This image highlights it one last time, with Unity using the red data 2 times. Also look at where Native Touch gets the red data: it shows that the callback runs in parallel, since it started before U2 yet ended after U3.

Clutch-1 frame advantage

This is one weird case where Unity uses the same coordinate even though we managed to get new magenta data just before the end of frame 3931. In the next frame, 3932, Unity still uses the same yellow data (with the Stationary phase as explained, but this time I added a log to make it more explicit). In this case we magically gain a 1 frame advantage even in a frame without a callback, which the NO frame advantage section described as not possible.

Another case: here you see we get 2 consecutive callbacks before the end of the previous frame (1 finger only). But Unity then did not choose the latest red data, but rather the older yellow data. In this case we can also gain a 1 frame advantage by using the red data.

The mysterious problem with Vulkan

Remember that in the demo you can drag really fast and always have the red square go ahead of the yellow square, thanks to the 1 frame advantage? (Turn off VSync to see this more easily.)

Somehow, if you uncheck "Auto Graphics API", put Vulkan above OpenGLES2/3, and the device actually gets to use Vulkan, you can no longer make the red square go ahead of the yellow one! Oh no! (No matter how fast you drag, they overlap completely except for some rare frames where red is ahead of yellow.)

This is what consistently happens on Vulkan.

For some reason most callbacks have moved to the end of the frame rather than the beginning. The touch contains new data, but we no longer have a chance to use it in this frame (exactly like the iOS case). Unfortunately this always results in NO frame advantage, but remember that you can still do other things faster, and you still keep the real timestamp to use later.

I have no idea why a graphics API can change the script execution order. My guess is that the rendering now has to share the same resources as the callback thread, and that causes this priority change.

Protip: Perfect combination with Native Audio

You definitely don't want to miss this if your goal for using Native Touch is faster audio response to touch. Unity's audioSource.Play() is also a main-thread-only operation, so if the touch decides whether to trigger sound feedback, you must first sync the touch to the main thread. Sometimes that is too late because the sync point has already passed in this frame of the main-thread world, so your faster touch ends up useless for playing audio sooner. In the worst case you get the same performance as with normal Unity touch. Remember that Unity processes audio commands together at the end of the frame, so all that matters for audioSource.Play() is to make it before the end of the frame; otherwise it is a draw with the normal way.

However, Native Audio asks Android to play audio natively and immediately, independent of Unity's frame concept, so it is actually usable from any thread. That means your faster touch now translates directly into FREE lower audio latency, regardless of the device's inherent latency. If you get the touch 2 frames earlier in a 60 FPS game, that is already about 33.3 ms (2 × 1000/60 ms) faster than playing audio from Unity's normal event system input. This is significant because it is equivalent to the width of the highest judgement window in many music games.

So you can do the reverse of the previous section: the main thread prepares enough data, in a mutex-protected collection, to decide whether a touch should play a sound. When the touch arrives, the callback can check that data and issue a Native Audio play immediately.
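The reversed direction can be sketched like this. The main thread publishes the decision data under a lock, and the touch callback reads it and plays immediately. This is a Python sketch of the idea only. `PlayableZones`, the zone layout and `play_native_audio` are hypothetical; `play_native_audio` stands in for whatever play call Native Audio actually gives you.

```python
import threading


class PlayableZones:
    """Decision data shared between threads: which horizontal zones should play which sound."""

    def __init__(self):
        self._lock = threading.Lock()
        self._zones = {}  # sound name -> (x_min, x_max)

    def publish(self, zones):
        """Main thread (e.g. every Update()): replace the decision data."""
        with self._lock:
            self._zones = dict(zones)

    def hit(self, x):
        """Touch thread: find the sound for a touch at x, or None."""
        with self._lock:
            for name, (x_min, x_max) in self._zones.items():
                if x_min <= x < x_max:
                    return name
        return None


played = []


def play_native_audio(name):
    """Hypothetical stand-in for a Native Audio play call, assumed safe from any thread."""
    played.append(name)


zones = PlayableZones()


def on_touch_began(x):
    """Touch callback thread: decide and play right away, no main-thread sync needed."""
    name = zones.hit(x)
    if name is not None:
        play_native_audio(name)


zones.publish({"kick": (0, 100), "snare": (100, 200)})
for x in (150, 300, 20):
    t = threading.Thread(target=on_touch_began, args=(x,))
    t.start()
    t.join()
print(played)  # ['snare', 'kick']
```

The lock is held only around the lookup. This keeps the touch thread's critical section short, so the main thread is rarely blocked when it publishes new data.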

If you have some native-anything plugin from other developers, I think it is likely to work when called directly in this threaded Android callback.

VS Unity's API

As far as I know, Unity follows the same ideas as on iOS: the delta-ed Began is there, and so is the discarding of Moved in ambiguous cases. However, remember that on Android we natively have the touch/finger ID! So with only one finger doing whatever on the screen, I have never seen Unity generate a touch with ID = 1 as it does on iOS, because on Android the finger ID makes it 100% certain that the touch is a single touch. There is no need for the emergency resolution used on iOS. (This is my deduction, not a fact.)

With that "must be 1 touch" constraint in place, Unity chooses the data differently when there are multiple callbacks.

Aggressive data loss

This time the rule is easy! When Unity gets more than one Moved, it just has to choose one and discard the rest. In this picture Unity simply chose the last one from each pack. All the other data I have marked with X is just gone, with no trace in what you get from Unity. (Unlike on iOS, where Unity spawns a touch with another ID and kind of packs them up.)

Without Native Touch: if there are n movement events in a frame, we lose n-1 of them. Note that this is not even Android's "historical touch" feature; all n movement events are perfectly linked to each other.
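The loss can be quantified with a toy model of the behaviour observed in the picture, where Unity keeps only the last Moved of each frame's pack. This is my model in Python, not Unity's actual implementation, and the coordinates are made up.

```python
def unity_like_reduce(frames):
    """Observed behaviour (model): Unity keeps only the last Moved of each frame's pack."""
    return [pack[-1] for pack in frames if pack]


def lost_events(frames):
    """Moved events that never reach Unity's API: n - 1 for a frame with n of them."""
    return sum(len(pack) - 1 for pack in frames if pack)


# Each inner list is the pack of Moved coordinates delivered by callbacks in one frame.
frames = [
    [(1, 1), (2, 2), (3, 3)],
    [(4, 4)],
    [(5, 5), (6, 6)],
]
native_touch = [pos for pack in frames for pos in pack]  # Native Touch sees every callback

print(unity_like_reduce(frames))  # [(3, 3), (4, 4), (6, 6)]
print(lost_events(frames))        # 3
print(len(native_touch))          # 6
```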

Late Began

In this image, Unity should have been able to use the red Began in frame 12257 (as per the 1-frame-prior rule), but it didn't. Maybe the data came too late in the previous frame. Anyway, Unity also misses 3 more Moved events in this frame. In the next frame Unity finally Begins the touch, but with which coordinate, given that 5 pieces of data have already passed? This time Unity chose the 4th one, but I am not sure how it was selected. It also uses the delta-ed Began technique, but this time I don't know where the un-delta-ed coordinate comes from; it seems very close to the red data (but not exact).

The following touches were chosen from the last of each pack of multiple Moved, like before.

Without Native Touch: we got only 3 representative events, Began-Moved-Moved, derived from the actual 9 callbacks Began-Moved-Moved-Moved-Moved-Moved-Moved-Moved-Moved.

More unique patterns not presented here might still exist, but I think these examples are enough for you to write your own "wrapper" or discover more patterns. The point is that with Native Touch we can get all of this discarded data.